Author: Cody Berra

Title: Senior Solutions Consultant, UptimeAI

This article first appeared on Cody Berra’s LinkedIn profile.

Spending six-figure dollar amounts on annual model maintenance just to make sure your model isn’t performing worse than it was yesterday?

It’s time for your population of critical equipment models to stop surviving and start thriving.

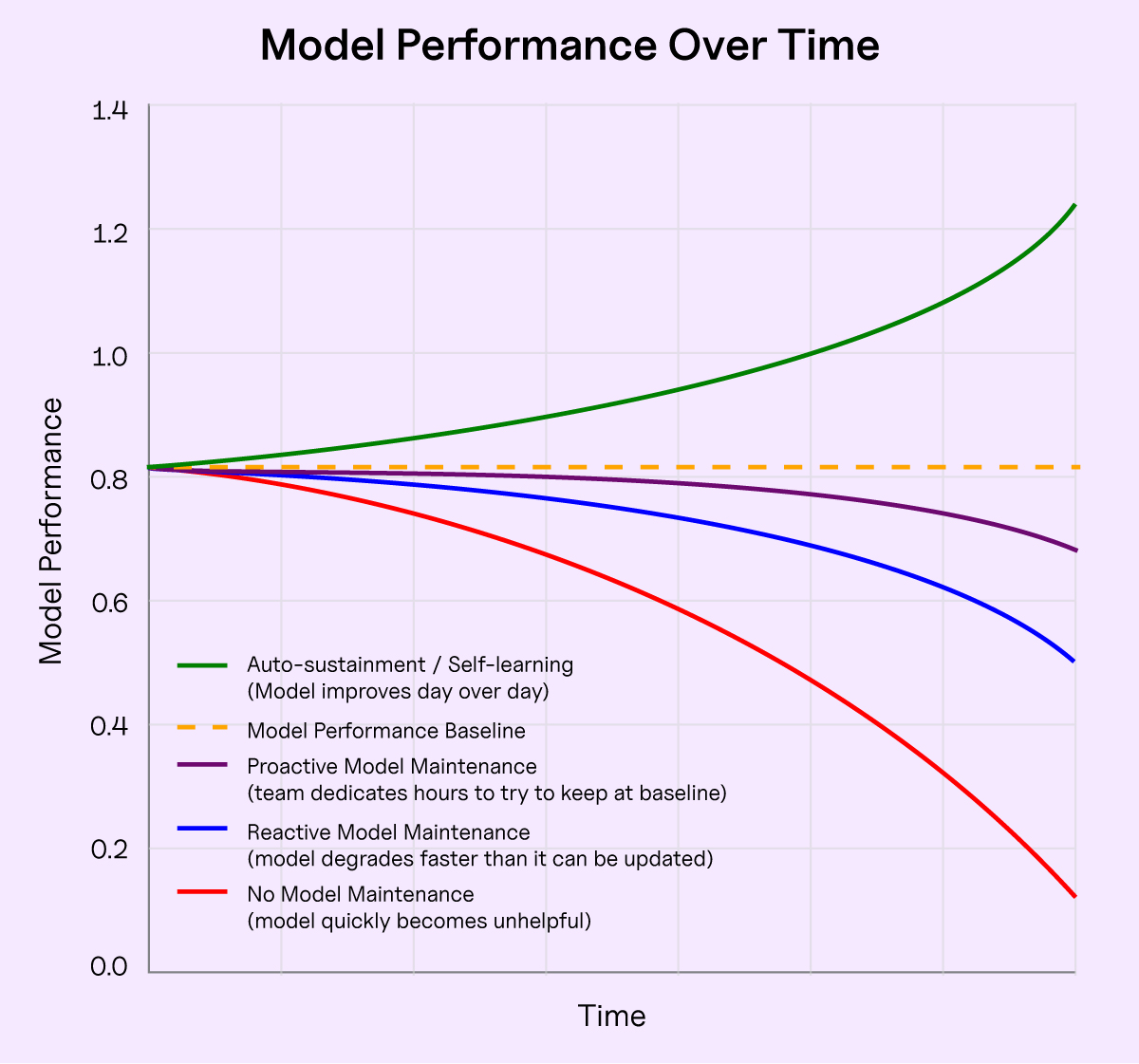

There was a time not too long ago when deploying a predictive model on a piece of critical process machinery was like buying a car: the model was never going to be as valuable as it was before you drove it off the lot. That’s because most models are built from a snapshot in time. Updating that snapshot to capture the nuances of current operating conditions, and ensuring thresholds are still set appropriately so you don’t over- or under-alert and erode operator trust, was a full-time job.

Having spent over half a decade working in GE’s Monitoring & Diagnostics (M&D) Center, I did more than my share of model maintenance for customers. We monitored incoming alerts and made tweaks to the underlying models when necessary to keep the alerts useful. At least 50% of my time was spent on the latter, meaning less time to sift through my customers’ alerts and catch anomalies that would lead to significant events. Our goal was always to keep the model performing as well as it was yesterday. We didn’t have the time or the resources to think about how we could make the model better day over day.

The Brutal Reality of Model Maintenance

The financial burden of requiring an FTE to maintain a software solution (or to outsource it to the vendor or a vendor partner) meant that adoption of advanced predictive models was often limited to the largest companies, like oil and gas or regulated utilities. Even within large companies, deployment of these technologies was typically reserved for just the top 5–10% of critical rotating equipment.

Monitoring large, expensive, single points of failure in the system was a victory for the first generation of predictive analytics solutions. But it didn’t take long to realize that monitoring them in isolation not only neglected other equipment components that could take the unit down, it also overlooked system-level effects that impacted how the critical equipment was operating.

Degradation Takes Many Forms

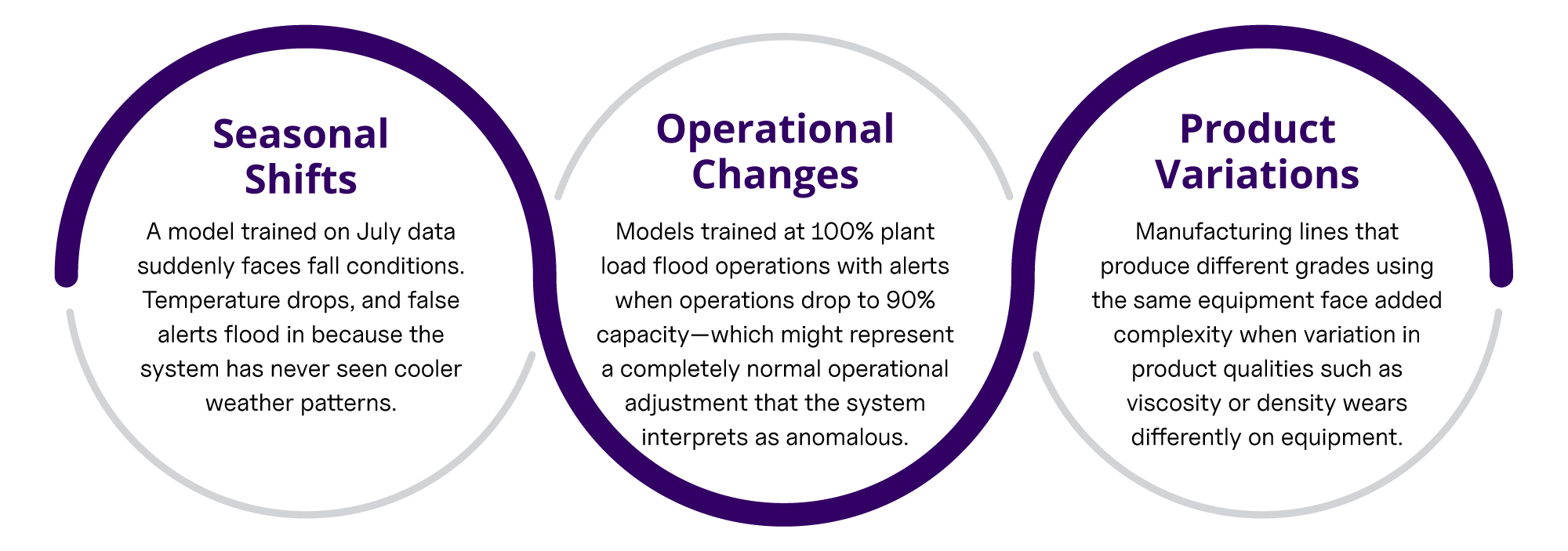

Overcoming the model-maintenance hurdles of first-generation predictive analytics technologies takes an understanding of what types of behaviors in a plant cause a model to degrade. Some common scenarios include:

When the models degrade, alerts rise exponentially. This initiates a reactive maintenance cycle where engineers aren’t optimizing for better insights or improved predictions—they’re optimizing for alert volume, trying to get it to a point where they can get through all the alerts in a week.

The Human Error Effect

Process and operations experts know the types of scenarios that can impact prediction quality and use that expertise to inform when model maintenance might be required. But relying on humans to both identify the need and make the updates introduces another layer of vulnerability. Even within the M&D Center, different engineers had different risk tolerances. One might widen alert boundaries to 10 degrees above normal, while another would choose 7. These static numbers become permanent fixtures in the system, creating inconsistent monitoring standards across equipment and sites.
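To make the inconsistency concrete, here is a minimal sketch of how two static offsets treat the same data. Everything in it is illustrative: the `count_alerts` helper, the temperature readings, and the "normal" value of 150 degrees are assumptions made for this example, not figures from the M&D Center or from any vendor's product.

```python
from typing import Sequence


def count_alerts(readings: Sequence[float], baseline: float, offset: float) -> int:
    """Count readings above a static upper boundary of baseline + offset."""
    limit = baseline + offset
    return sum(1 for value in readings if value > limit)


# Hypothetical temperature readings (degrees) and an assumed "normal" of 150.
readings = [152.0, 156.5, 158.0, 159.5, 161.0, 149.0, 157.2]
baseline = 150.0

# Two engineers, two risk tolerances, same equipment data.
alerts_engineer_a = count_alerts(readings, baseline, offset=10.0)
alerts_engineer_b = count_alerts(readings, baseline, offset=7.0)

print(f"10-degree boundary: {alerts_engineer_a} alert(s)")
print(f"7-degree boundary: {alerts_engineer_b} alert(s)")
```

With these made-up readings, the 10-degree boundary raises one alert and the 7-degree boundary raises four. Neither choice is wrong on its own, but once each number is hard-coded, two identical machines can end up with very different alert volumes.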

Expertise Meets Modern Computing & AI

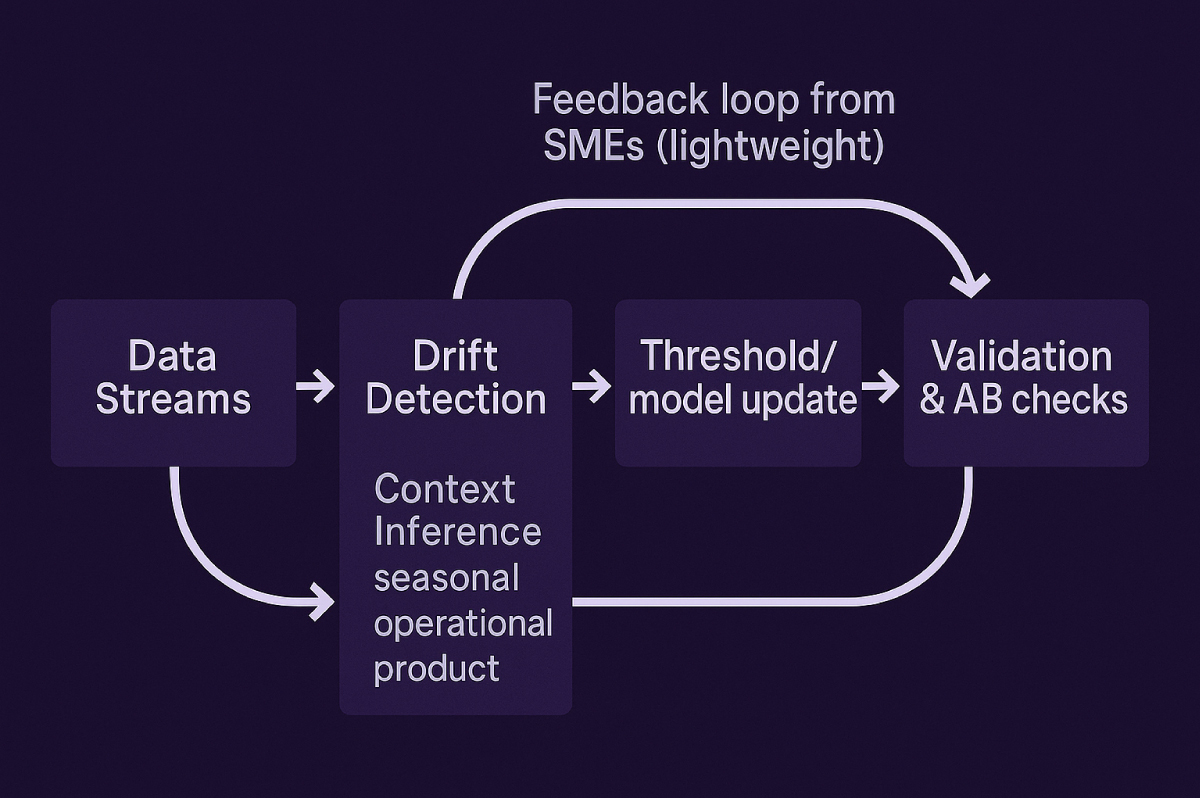

We want the thought process of the domain expert without the risk of human error. We need to know when a model update is needed and what additional context to incorporate in the update. Leveraging recent advancements in artificial intelligence and cloud computing, UptimeAI is proving to customers that you can, in fact, automate model sustainment.

When the annual model-maintenance burden is alleviated, coverage can be expanded from just the centerline equipment to the entire unit or plant. This increased contextualization unlocks insights between upstream and downstream equipment—performance and reliability insights that were previously unattainable. The wider aperture has translated into a 90% reduction in false alarms compared to conventional predictive analytics solutions. Automated model optimization also removes the barrier to entry for small to mid-size operators who previously struggled to operationalize a predictive maintenance program.

The previous generation of predictive analytics solutions stopped at anomaly detection, relying on subject-matter experts (SMEs) to interpret the alerts. UptimeAI has embedded private, agentic LLMs into the alert interface to diagnose issues and summarize the best available corrective actions, pairing problem identification with 8x faster resolution.

With the reduction in false alerts, your most valuable SMEs are freed up to focus on process improvements. That six-figure annual M&D spend could be the next great debottlenecking project.