Author: Cody Berra

Title: Senior Solutions Consultant at UptimeAI

The question isn’t “Will you implement AI?” It’s “Will you be able to trust it when it matters most?”

Every industrial leader already knows that AI is coming. In fact, most have already begun investing in it: AI accounts for 30% of all new IT budget increases in 2025. The bigger question now isn’t whether to use AI; it’s whether you can trust it when the stakes are high. In heavy-asset industries such as power generation, oil & gas, chemicals, and cement, a wrong recommendation from a “black box” AI can do more damage than no recommendation at all.

This risk is colliding with another long-brewing challenge: the loss of institutional knowledge as the most experienced workers retire. According to McKinsey, the proportion of manufacturing employees over 55 has more than doubled since 1995, and The Manufacturing Institute reports that 78% of companies are deeply concerned about the pending exodus. It’s not just a matter of filling seats; critical operational knowledge is walking out the door, often without being documented. “The single biggest factor that’s preventing manufacturers from growing is the lack of an available and skilled workforce,” said Paul Lavoie, Connecticut’s chief manufacturing officer, in an interview.

The Knowledge Crisis That’s Already Here

Consider the story of Dave Smith, a maintenance superintendent at a petrochemical facility who retired after 30 years. His replacement quickly learned that the real job description wasn’t in any manual. Within weeks, the plant was calling Smith at home for advice on problems that, to him, were second nature. Eventually, they brought him back as a consultant just to keep things running. Multiply this by hundreds of facilities across the country, and you start to see the scale of the challenge.

This kind of tacit knowledge, like knowing which circuit to check first when a motor trips, recognizing the sound of a bearing on its way out, or understanding why a particular adjustment works differently in humid weather, takes decades to build. Once it’s gone, it can’t be replaced overnight.

The scale of the knowledge loss problem underscores the urgency of getting this right. Poor knowledge transfer costs large companies an estimated $47 million each year. And in a survey of 1,500 retiring baby boomers, 57% said they shared less than half their job knowledge before leaving, while 21% admitted they shared virtually nothing at all. This isn’t just losing people; it’s haemorrhaging hard-earned insight built over decades of working through ambiguous operational problems.

The Black Box Problem in Industrial AI

The natural response is to look to AI to capture and scale this expertise before it disappears. But here’s the catch: in many plants, the AI being sold today can’t explain its reasoning. Companies also struggle to map data to real maintenance decisions and to embed alerts and predictions into site operations, which makes approaches like predictive maintenance harder to apply effectively.

The result is AI that produces alerts without context, probabilities without cause, and recommendations without justification. In other words, it’s a black box. And when operators can’t see why the AI is making a call, they don’t trust it, and they won’t use it.

The hype hasn’t helped. Over the past few years, “AI washing” has become common. Every industrial software vendor now seems to have an AI story, whether or not their technology fits the definition. Many of these tools are little more than threshold alarms with a new marketing label. They can detect that something is wrong but can’t tell you what, why, or how to fix it. In safety-critical environments, this lack of transparency is more than an inconvenience; it’s a liability.

This concern isn’t unfounded. The Federal Trade Commission has warned about misleading or unsubstantiated AI claims, and ISACA research shows that poorly implemented AI can produce confidently incorrect recommendations. In critical environments, a wrong suggestion isn’t just inconvenient; it can cause unplanned downtime, safety incidents, or even regulatory violations. The lesson is clear: without explainability and domain grounding, AI becomes a liability instead of an asset.

Why Explainability Changes Everything

That’s why explainability and traceability are now the defining traits of industrial AI worth deploying. When AI can show its reasoning, it becomes a partner rather than an oracle. It also becomes a powerful tool for bridging the generational knowledge gap, helping new hires understand why a corrective action is needed, just like a veteran mentor would.

Take a real-world example from a power plant. The AI Expert triggered an alert on high vibration for Feedwater Pump 3. Instead of simply stating “vibration high,” it pulled together vibration, flow, and suction pressure data from the historian, cross-referenced recent work orders, scanned past RCA reports for similar events, and even noted an operator shift log mentioning “startup vibration and belt squeal” from two days earlier. The system then explained:

“This vibration pattern matches a prior event from August 2023 caused by a loose baseplate and misalignment after a seal replacement. Flow is steady, but suction pressure is down 6%, and motor current is elevated, consistent with partial cavitation risk.

“Recommend inspection of foundation bolts and pump alignment with draft work order attached.”

That’s not just an alert; it’s a reasoning chain. The operator can see the data inputs, the historical context, and the logic connecting the dots. It’s the kind of analysis an experienced engineer like Dave Smith would provide, except it’s available instantly, at any hour, and for every employee on shift.
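To make the idea of a reasoning chain more concrete, here is a minimal Python sketch of one way an alert can carry its own evidence: observed signal changes are compared against a small library of failure-mode signatures, and each candidate cause keeps the observations that support or contradict it. This is an illustration under stated assumptions, not how AI Expert works internally. The `FailureMode` and `Hypothesis` classes, the three library entries, the 2% tolerance, and every magnitude except the 6% suction-pressure drop are hypothetical; only the signal directions come from the feedwater pump example.

```python
from dataclasses import dataclass, field


@dataclass
class FailureMode:
    """Hypothetical failure-mode signature: the direction each signal should move."""
    name: str
    signature: dict[str, str]  # signal name -> "up", "down" or "steady"
    action: str


@dataclass
class Hypothesis:
    """A candidate cause plus the evidence for and against it."""
    mode: FailureMode
    supports: list[str] = field(default_factory=list)
    contradicts: list[str] = field(default_factory=list)

    @property
    def score(self) -> float:
        # Share of the signature's expectations that the data actually showed.
        checked = len(self.supports) + len(self.contradicts)
        return len(self.supports) / checked if checked else 0.0


def direction(change_pct: float, tolerance_pct: float = 2.0) -> str:
    """Turn a % change versus the healthy baseline into a coarse direction."""
    if change_pct > tolerance_pct:
        return "up"
    if change_pct < -tolerance_pct:
        return "down"
    return "steady"


def explain(observed: dict[str, float], library: list[FailureMode]) -> list[Hypothesis]:
    """Score every failure mode, keep the reasoning, and rank best match first."""
    seen = {signal: direction(pct) for signal, pct in observed.items()}
    ranked = []
    for mode in library:
        hyp = Hypothesis(mode)
        for signal, expected in mode.signature.items():
            actual = seen.get(signal, "unmeasured")
            note = f"{signal} is {actual} (expected {expected})"
            (hyp.supports if actual == expected else hyp.contradicts).append(note)
        ranked.append(hyp)
    # sorted() is stable, so hypotheses with equal scores keep library order.
    return sorted(ranked, key=lambda h: h.score, reverse=True)


# Hypothetical library and magnitudes (% change vs. baseline) for illustration only.
LIBRARY = [
    FailureMode("Baseplate looseness / misalignment",
                {"vibration": "up", "flow": "steady", "motor_current": "up"},
                "Inspect foundation bolts and pump alignment"),
    FailureMode("Partial cavitation",
                {"vibration": "up", "suction_pressure": "down"},
                "Check suction conditions"),
    FailureMode("Impeller wear",
                {"vibration": "up", "flow": "down"},
                "Schedule impeller inspection"),
]
observed = {"vibration": 35.0, "flow": 0.5, "suction_pressure": -6.0, "motor_current": 4.0}

ranked = explain(observed, LIBRARY)
for hyp in ranked:
    print(f"{hyp.mode.name}: {hyp.score:.2f} -> {hyp.mode.action}")
    for note in hyp.supports:
        print("   supports:   ", note)
    for note in hyp.contradicts:
        print("   contradicts:", note)
```

Because each hypothesis keeps its supporting and contradicting notes, an operator can see why misalignment and cavitation both outrank impeller wear in this toy library: steady flow counts against impeller wear, and that reason is printed alongside the score.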

This is the essence of explainable AI: transparency that builds trust. It preserves institutional knowledge, accelerates decision-making, and enables newer staff to learn on the job. And because the reasoning is visible, it can be challenged, confirmed, and refined—making the AI smarter over time.

What Trustworthy Industrial AI Looks Like

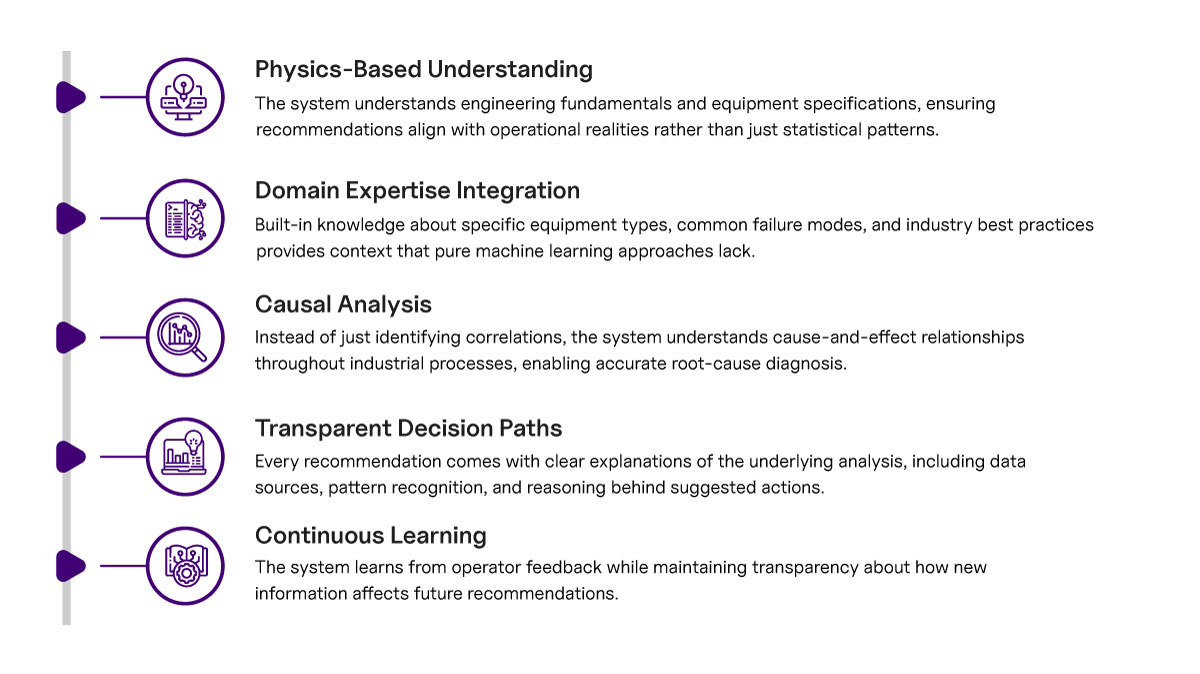

The kind of AI that solves these problems isn’t just machine learning in a vacuum. It’s a blend of physics-based modelling, statistical learning, and embedded domain expertise that understands equipment types, failure modes, and the causal relationships between assets. When performance issues arise, it can trace the chain of events, assess upstream and downstream impacts, and deliver not only a likely cause but also the rationale behind it—much like an experienced maintenance supervisor would do in real life.
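Tracing upstream and downstream impacts can be pictured as a walk over a graph of process connections. The Python sketch below is a minimal breadth-first search, assuming a hypothetical, heavily simplified `FLOWS` map in which each asset lists the assets it feeds. A real plant model would likely need much more (physics, control loops, shared utilities), and this is not a description of any vendor’s System Model.

```python
from collections import deque

# Hypothetical, simplified process flow: each asset maps to the assets it feeds.
FLOWS: dict[str, list[str]] = {
    "Condensate Pump 1": ["Deaerator"],
    "Deaerator": ["Feedwater Pump 3"],
    "Feedwater Pump 3": ["Economizer"],
    "Economizer": ["Boiler Drum"],
    "Boiler Drum": [],
}


def reverse(graph: dict[str, list[str]]) -> dict[str, list[str]]:
    """Flip every edge so a walk follows the flow backwards (upstream)."""
    rev: dict[str, list[str]] = {node: [] for node in graph}
    for src, dsts in graph.items():
        for dst in dsts:
            rev.setdefault(dst, []).append(src)
    return rev


def trace(graph: dict[str, list[str]], start: str) -> list[tuple[str, int]]:
    """Breadth-first walk returning (asset, hops) for everything reachable from start."""
    seen = {start}
    order: list[tuple[str, int]] = []
    queue = deque([(start, 0)])
    while queue:
        node, hops = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append((nxt, hops + 1))
                queue.append((nxt, hops + 1))
    return order


downstream = trace(FLOWS, "Feedwater Pump 3")          # [("Economizer", 1), ("Boiler Drum", 2)]
upstream = trace(reverse(FLOWS), "Feedwater Pump 3")   # [("Deaerator", 1), ("Condensate Pump 1", 2)]
print("Upstream (candidate causes):", upstream)
print("Downstream (possible impacts):", downstream)
```

Walking the reversed graph lists assets that could be feeding a problem into Feedwater Pump 3, such as a suction-pressure drop, while the forward walk lists assets whose performance it could affect; the hop count gives a rough sense of distance.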

Effective industrial AI must incorporate five key elements:

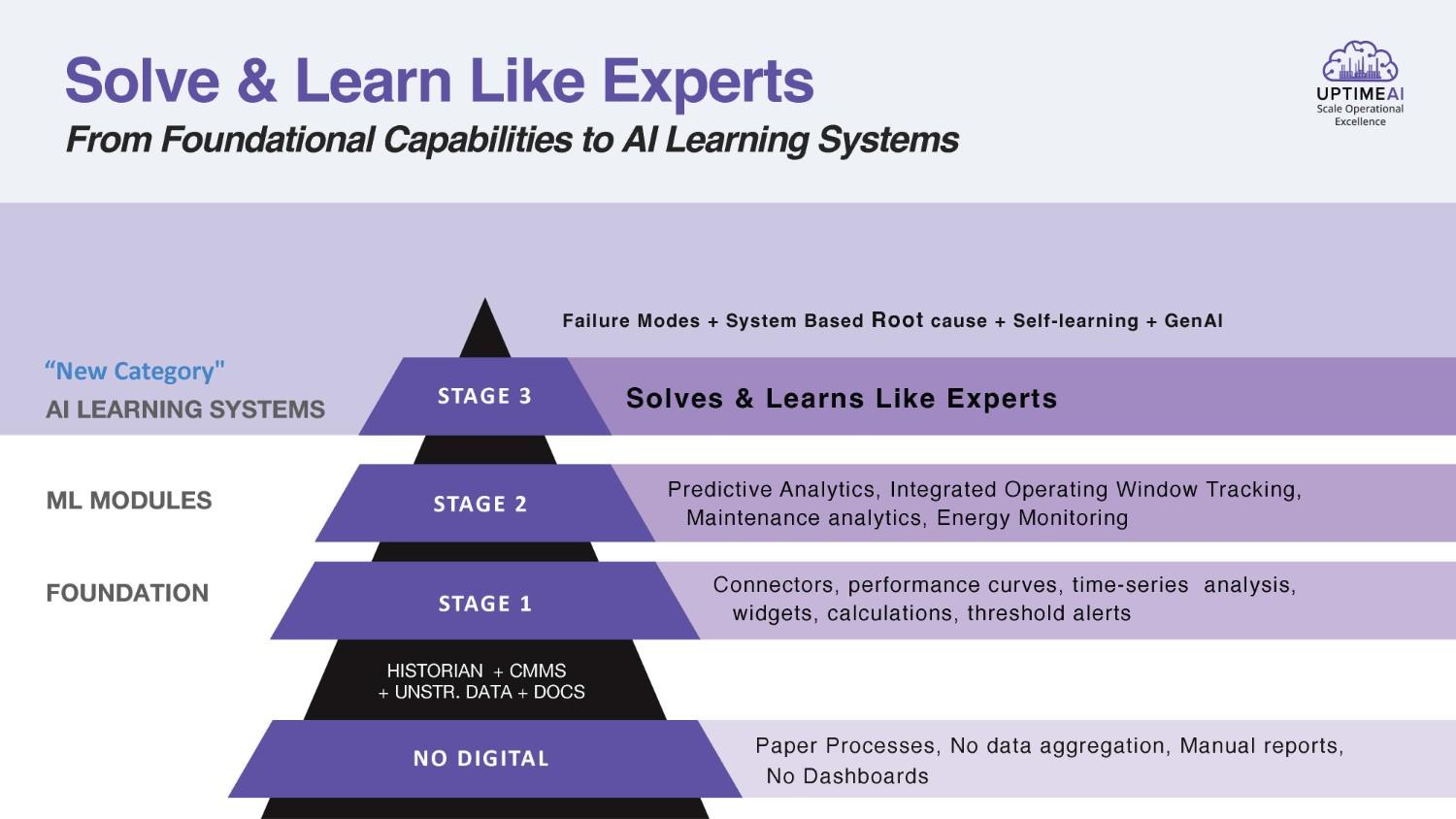

The UptimeAI Approach: Comprehensive Coverage with Complete Transparency

At UptimeAI, this philosophy has shaped our entire approach to AI in industrial operations. We don’t believe in partial coverage or “AI-lite” solutions that monitor a small fraction of the plant. Using our patented System Model approach, our AI Expert monitors 100% of the assets in a facility, well beyond the 10–20% coverage typically offered by competitors. According to Frost & Sullivan analysis, this comprehensive coverage ensures no blind spots. If an engineer asks about a motor, turbine, exchanger, or pump, the system already has the data, the history, and the context.

The foundation of this capability is embedded domain knowledge: more than 120 equipment types and over 500 defined failure modes are built directly into the system. This isn’t just generic machine learning—it’s AI informed by the same kinds of engineering principles and process relationships a seasoned operator would apply. When a performance issue emerges, the AI can understand upstream and downstream impacts, recognize known failure patterns, and connect them to prescriptive, context-rich recommendations.

And the learning doesn’t stop there. Every time an engineer confirms or refines a diagnosis, that feedback is captured and incorporated. Over time, AI Expert becomes more attuned to the unique operating conditions, equipment quirks, and process characteristics of that specific plant. It’s a living knowledge base that grows stronger with every interaction, much like a human expert who gets better the more time they spend on the job.
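One simple way to picture this feedback loop is a per-plant ledger of engineer verdicts that re-weights future diagnoses. The Python sketch below assumes a hypothetical `FeedbackLedger` class and uses a Laplace-smoothed confirmation rate, which is just one textbook choice; it illustrates the idea and is not UptimeAI’s learning method. The failure-mode names and verdict history are made up.

```python
from collections import defaultdict


class FeedbackLedger:
    """Hypothetical per-plant record of how engineers judged past diagnoses."""

    def __init__(self) -> None:
        self.confirmed: defaultdict[str, int] = defaultdict(int)  # mode -> times confirmed
        self.rejected: defaultdict[str, int] = defaultdict(int)   # mode -> times rejected

    def record(self, failure_mode: str, confirmed: bool) -> None:
        """Log one engineer verdict on a diagnosis."""
        if confirmed:
            self.confirmed[failure_mode] += 1
        else:
            self.rejected[failure_mode] += 1

    def reliability(self, failure_mode: str) -> float:
        """Laplace-smoothed confirmation rate; 0.5 before any feedback arrives."""
        c = self.confirmed[failure_mode]
        r = self.rejected[failure_mode]
        return (c + 1) / (c + r + 2)

    def adjust(self, failure_mode: str, match_score: float) -> float:
        """Weight a pattern-match score by this plant's track record for the diagnosis."""
        return match_score * self.reliability(failure_mode)


ledger = FeedbackLedger()
for verdict in (True, True, True, False):  # hypothetical history: 3 confirmed, 1 rejected
    ledger.record("Baseplate looseness / misalignment", verdict)
ledger.record("Partial cavitation", False)

print(ledger.adjust("Baseplate looseness / misalignment", 1.0))  # 4/6
print(ledger.adjust("Partial cavitation", 1.0))                  # 1/3
print(ledger.adjust("Impeller wear", 0.5))                       # 0.25 (no feedback yet)
```

With no feedback, every failure mode gets a neutral weight of 0.5, so rankings are unchanged until engineers start weighing in; after that, each confirmation or rejection nudges that plant’s scores toward the diagnoses that have proven right there.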

Measurable Results from Trustworthy AI

The impact of this approach is measurable. Customers see a 10–15x ROI within nine months, driven by avoided failures, reduced maintenance costs, and increased availability. Nine out of ten customers choose to scale UptimeAI across all sites within the first year. And perhaps most tellingly, the adoption curve is steep not because leadership mandates it, but because operators trust it. They trust it because they can see its reasoning, validate its conclusions, and learn from its explanations.

Ready to see explainable AI in action? Contact us to discover how UptimeAI’s approach can transform your operations while preserving critical knowledge.

The Future Belongs to Explainable AI

The plants that succeed in the coming years will not just “use AI.” They’ll use AI they can trust. They’ll demand traceability in every recommendation, ensuring that when a critical decision needs to be made, the “why” is as clear as the “what.” In doing so, they’ll turn AI from a risky black box into a trusted advisor—one capable of carrying the wisdom of retiring experts forward to the next generation.

The retirement wave isn’t slowing down. The AI market isn’t getting any quieter. The differentiator will be the ability to combine explainable AI with systematic knowledge capture—to make every recommendation not just accurate, but understandable.

In the end, the goal isn’t to replace human expertise with AI. It’s to preserve it, scale it, and make it available to every operator, every shift, in every plant. Because when AI can explain itself, people will trust it. And when people trust it, they’ll use it. And when they use it, the whole operation gets better.

That’s the path from black box to trusted advisor—and it’s the only path worth taking.

Take Action: Build Trustworthy AI for Your Operations

The knowledge exodus is happening now, but you don’t have to face it unprepared. UptimeAI’s explainable AI platform helps industrial operations preserve critical expertise while building the trust necessary for successful AI adoption.

Ready to transform your approach to industrial AI?

Don’t wait until your most experienced operators retire. Start building trustworthy AI systems that preserve knowledge, enhance decision-making, and create sustainable competitive advantages today.