Author: Jagadish Gattu

Title: CEO, UptimeAI

In our previous exploration of explainable AI in heavy industry, we established that transparency and trust are foundational to successful AI adoption in critical operations. As industrial AI deployment accelerates, a more complex challenge has emerged: intelligently reasoning across conflicting data sources.

The question is evolving from “Can you explain your recommendation?” to “How do you handle dissonance between work orders and Root Cause Analysis (RCA) reports from different time periods and system configurations?”

The Multi-Source Intelligence Challenge

During a technical discussion with a power generation company, this question emerged:

“How do you handle dissonance between work orders and RCA reports? There could have been an RCA on a similar event five years ago where the root cause was X, but the actual composition of that system was different.”

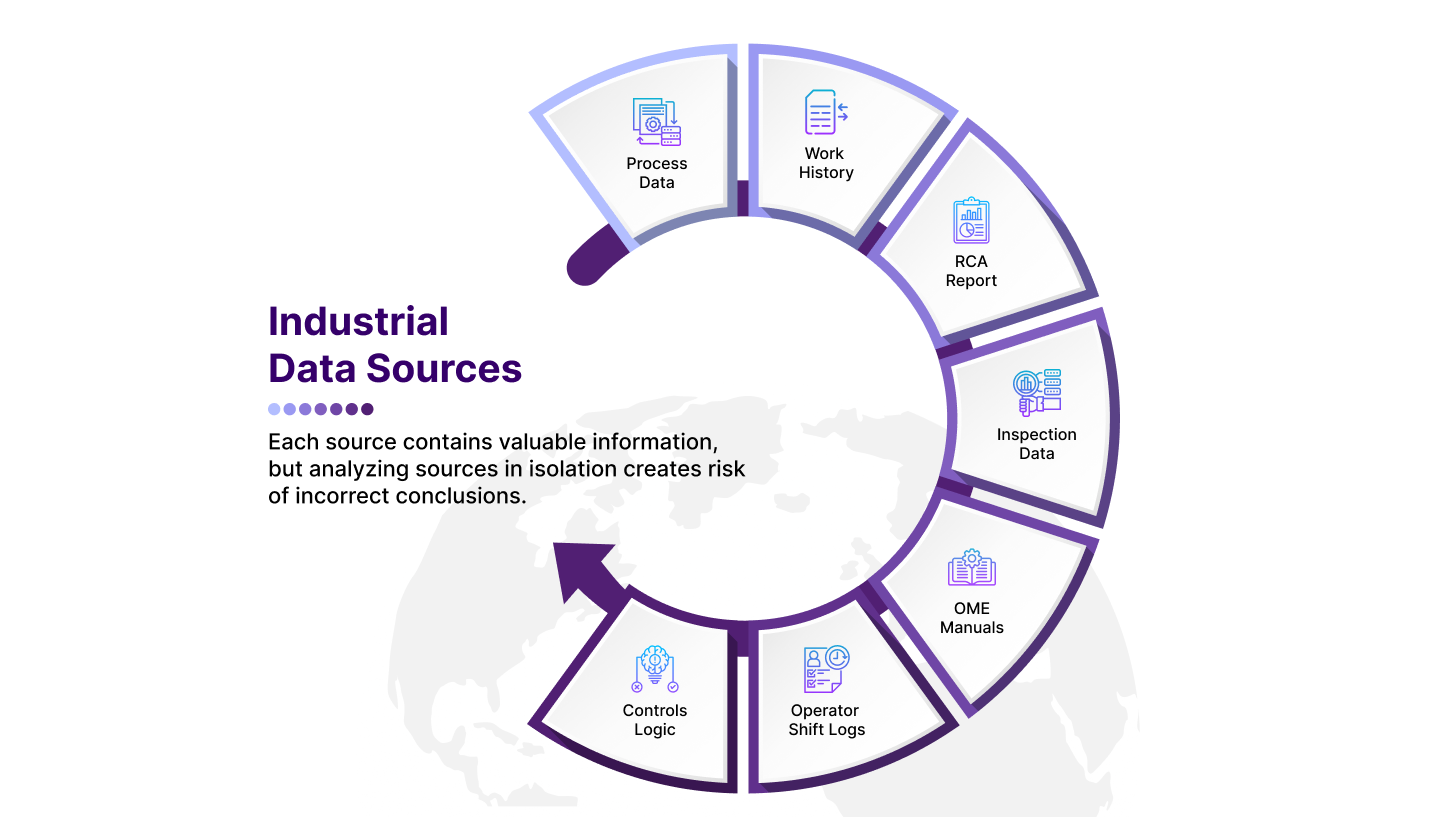

This question highlights a critical challenge. Industrial AI has evolved from statistical analysis of time series data to LLM interpretation of unstructured data sources, so successful models must now reason across:

- Real-time sensor data from process historians

- Historical maintenance records with varying quality and context

- Root cause analysis reports from different system configurations

- Operating manuals and OEM documentation

- Work order histories with incomplete information

- Equipment metadata and asset hierarchies

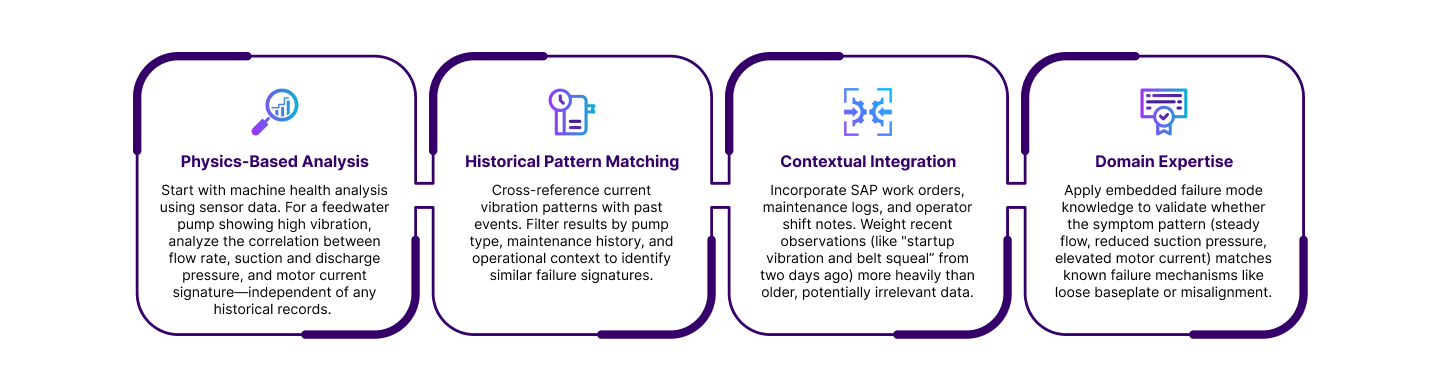

Multi-Layer Verification: AI Rooted in Expert Behavior

Getting to the most robust answer requires treating each information source as an independent verification layer rather than choosing between data sources. This approach follows the same logical workflow a plant expert would follow when investigating an issue. For example, an issue with vibration in a feedwater pump might involve the following activities.

This layered approach has helped SMEs conclude whether current symptoms match prior experience, while accounting for the specific operational context and recent maintenance activities. Applying this to AI workflows ensures that even when historical data contains inconsistencies, analysis remains grounded in current physical reality.
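One way to picture the configuration problem raised in the customer's question is to discount a historical RCA by how closely its system configuration matches today's and by how old it is. The sketch below is illustrative only: the `HistoricalRca` record, the component names, the Jaccard overlap measure, and the five-year half-life are all assumptions made for the example, not a description of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HistoricalRca:
    """Hypothetical record of a past root cause analysis."""
    root_cause: str
    components: frozenset  # system configuration at the time of the RCA
    age_years: float


def config_similarity(past: frozenset, current: frozenset) -> float:
    """Jaccard overlap between two component sets (0 = disjoint, 1 = identical)."""
    union = past | current
    return len(past & current) / len(union) if union else 0.0


def relevance(rca: HistoricalRca, current: frozenset, half_life_years: float = 5.0) -> float:
    """Configuration overlap, halved for every `half_life_years` of age (assumed decay)."""
    recency = 0.5 ** (rca.age_years / half_life_years)
    return config_similarity(rca.components, current) * recency


# Made-up configurations: only the bearing type is shared between then and now.
current_config = frozenset({"pump_model_B", "journal_bearing", "vfd_drive"})
old_rca = HistoricalRca(
    root_cause="bearing wear",
    components=frozenset({"pump_model_A", "journal_bearing", "fixed_speed_drive"}),
    age_years=5.0,
)
score = relevance(old_rca, current_config)
print(f"{old_rca.root_cause}: relevance {score:.2f}")
```

A low score does not discard the old RCA. It simply tells the downstream reasoning to treat that report as weak evidence until current sensor data or recent maintenance records corroborate it.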

Beyond Sensor-Based Monitoring

While legacy systems excel at detecting statistical deviations in time series data, the next generation builds on advances in system-level analysis, self-learning, and interpretation of unstructured data to deliver the complete picture quickly and with less room for human error.

Consider the feedwater pump example. Advanced multi-source intelligence:

- Analyzes the physics: correlates vibration with flow, pressure, and current

- Searches maintenance history, filtering by pump type and context

- Reviews inspection records and recent maintenance activities

- Scans operational logs for relevant observations

- Maps symptom patterns to known failure mechanisms

- Reasons across sources, weighing evidence quality
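The first step in the list, correlating vibration with process variables, can be sketched with a plain Pearson correlation. The readings below are made-up values for illustration, and real historian data would need time alignment and cleaning first; this is a minimal sketch, not a claim about how any specific product performs the analysis.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat signal carries no correlation information
    return cov / sqrt(var_x * var_y)


# Illustrative, made-up readings sampled at the same timestamps.
vibration = [2.1, 2.3, 2.8, 3.4, 3.9, 4.6]
signals = {
    "flow": [410, 405, 390, 372, 360, 341],
    "pressure": [88, 88, 87, 88, 87, 88],
    "motor_current": [61, 62, 64, 67, 69, 73],
}

correlations = {name: pearson(vibration, values) for name, values in signals.items()}
# Print the strongest relationships first, regardless of sign.
for name, r in sorted(correlations.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>13}: r = {r:+.2f}")
```

In this invented data set, vibration rises as flow falls and motor current climbs while pressure stays flat, which is the kind of pattern that narrows the list of plausible failure mechanisms before any maintenance records are consulted.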

Orchestrating specialized agents across these sources yields a reasoned diagnosis that synthesizes the information streams, accounts for conflicts or missing data, and stays explainable about both its findings and how it resolved conflicts.
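As a rough illustration of weighing evidence quality while keeping conflicts visible, the sketch below sums an assumed quality weight per hypothesis and reports which sources disagreed. The `Evidence` type, the `reconcile` function, the source names, and the weights are hypothetical; a production system would derive weights from data quality and context rather than hard-code them.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """One source's hypothesis, with an assumed quality weight in [0, 1]."""
    source: str
    hypothesis: str
    quality: float


def reconcile(evidence):
    """Rank hypotheses by summed quality and keep dissenting sources visible."""
    if not evidence:
        raise ValueError("no evidence to reconcile")
    totals = defaultdict(float)
    for item in evidence:
        totals[item.hypothesis] += item.quality
    best = max(totals, key=totals.get)
    total_weight = sum(totals.values())
    return {
        "diagnosis": best,
        # Share of all evidence weight that backs the leading hypothesis.
        "support": round(totals[best] / total_weight, 2) if total_weight else 0.0,
        "supporting": [e.source for e in evidence if e.hypothesis == best],
        "conflicting": [(e.source, e.hypothesis) for e in evidence if e.hypothesis != best],
    }


# Made-up findings for the feedwater pump example.
findings = [
    Evidence("sensor correlation", "cavitation", 0.9),
    Evidence("operator log", "cavitation", 0.6),
    Evidence("RCA from five years ago", "bearing wear", 0.3),
    Evidence("recent work order", "misalignment", 0.2),
]
result = reconcile(findings)
print(result)
```

Returning the conflicting sources alongside the diagnosis, rather than silently dropping them, is what lets an operator see both the conclusion and how the disagreement was resolved.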

Measuring Efficacy in Multi-Source Systems

Another key question we hear from industry is: “How do you measure the efficacy of your agents? Do you employ recall and precision metrics?”

Advanced systems incorporate multiple validation approaches across the model lifecycle:

- Historical Validation: Often done before deployment, this means back-testing against known failure scenarios where root causes were definitively determined.

- Continuous Feedback: Once live, every user interaction feeds model optimization, so accuracy improves over time.

- Operational Metrics: Tracking real-world outcomes. Did recommended actions resolve issues? How often did investigations confirm the root cause hypothesis?
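To make the precision and recall question concrete, here is a minimal sketch of scoring a back-test in which each case pairs the diagnosed root cause with the one an investigation later confirmed. The case data and the `precision_recall` helper are invented for illustration and do not represent results from any real deployment.

```python
def precision_recall(cases, label):
    """Precision and recall for one root cause label over back-test cases.

    Each case is a (predicted_root_cause, confirmed_root_cause) pair.
    """
    tp = sum(1 for pred, actual in cases if pred == label and actual == label)
    fp = sum(1 for pred, actual in cases if pred == label and actual != label)
    fn = sum(1 for pred, actual in cases if pred != label and actual == label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up back-test: (what the model diagnosed, what the investigation confirmed).
backtest = [
    ("cavitation", "cavitation"),
    ("cavitation", "cavitation"),
    ("cavitation", "bearing wear"),
    ("bearing wear", "bearing wear"),
    ("misalignment", "cavitation"),
    ("bearing wear", "bearing wear"),
]

precision, recall = precision_recall(backtest, "cavitation")
# Operational metric: how often the confirmed cause matched the diagnosis.
confirmation_rate = sum(pred == actual for pred, actual in backtest) / len(backtest)
print(f"cavitation precision={precision:.2f} recall={recall:.2f}")
print(f"overall confirmation rate={confirmation_rate:.2f}")
```

Precision answers "when the system said cavitation, how often was it right?", while recall answers "of the real cavitation events, how many did it catch?". The confirmation rate is the simpler operational figure that maintenance teams tend to track once the system is live.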

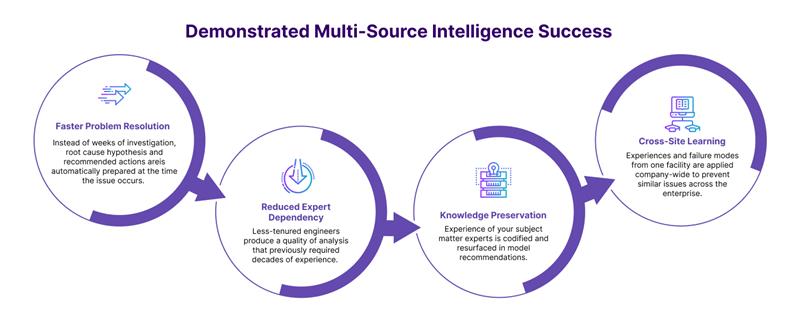

Organizations that master multi-source intelligence gain sustainable competitive advantages.

Ahead on the Horizon

The trajectory toward more sophisticated multi-source intelligence will continue to evolve as diverse data sources become more interpretable and commonplace. In addition to document, drawing and image analysis, we anticipate real-time computer vision will provide an additional verification layer. The ever-growing stores of historical data combined with new, additional context will pave the way for autonomous optimization—systems that don’t just diagnose problems but continuously optimize operations for efficiency and reliability. The organizations that thrive in this evolution will be those that embrace multi-source intelligence not as a replacement for human expertise, but as an amplifier of it—providing every operator with access to comprehensive, reasoned analysis that considers all available evidence.

The Path Forward

Industrial AI technology has moved beyond simple alerting and threshold monitoring for critical equipment. The uptime and efficiency gains now go to organizations that harness multi-source intelligence to interpret and act on the complexity and ambiguity of real-world operations. Improvements in AI explainability, such as a system's ability to reason across all of your data sources while remaining transparent about how it reaches its conclusions, will only accelerate adoption. In the end, the most successful industrial AI won’t just explain its reasoning; it will demonstrate how it navigated conflicting information, weighed evidence quality, and arrived at the most reliable answer possible given all available data. It will build trust the same way humans do: by being curious, comprehensive, and proven.